Continuous development of the Front-End Tier with Grunt (Node.js)

Developing the user interface (the front-end tier) requires science and art to work together. To make that work, I relied on one process and one toolset: continuous integration with Grunt.

The Benefits:

Using Grunt as a continuous development tool for the front-end tier, I improved quality by:

- applying best practices

- collecting metrics through static analysis of CSS, HTML and JS

- supporting BDD (Behavior-Driven Development) with unit tests and code coverage for the JavaScript code.

It also boosted productivity:

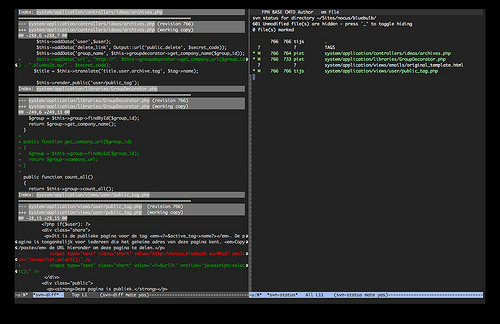

Development Process with Grunt

1) Whenever a file is saved, it is compiled or processed:

- Stylus -> CSS files

- CoffeeScript -> JS files

2) The validation (static analysis) tools run.

3) The unit tests run.

4) The files are copied to the development web server.

5) The browser reloads to show the changes.

The plugins are:

CSS

- Stylus

- CSS Lint (static code quality analysis)

JavaScript

- CoffeeScript

- JSHint (static code quality analysis)

- Jasmine (unit tests)

- grunt-template-jasmine-istanbul (code coverage)

HTML

- lint5 (static code quality analysis)

To handle the development process:

- Watch

- Connect (runs a local web server)

- Proxy-Connect (proxies AJAX calls from the local server to a remote backend)

To handle the delivery process:

- htmlmin

- Copy

- cssmin

- regex-replace

- uglify

Unit Testing?

Even better, I followed a Behavior-Driven Development process. The testing framework I ran through Grunt is Jasmine. Why? It is simple to configure, runs on top of the PhantomJS headless browser engine, and supports code coverage.

Coding JavaScript?

Even better, I used CoffeeScript. The benefits are better code quality and higher productivity, because the language exposes only the good parts of JavaScript. The generated code is JSLint-clean and the source is more readable. Because the code must be compiled, syntax errors and many typos are caught before the code ever runs.

Lessons learned:

- Always start with BDD: writing Jasmine specs first leads to a better design, keeps the code testable, and encourages best practices such as the MVC pattern on the front end.

- CSSLint provides great feedback for improving the quality of the CSS.

Next steps for my process:

- Integrate Grunt with a CI server (Jenkins).

- Feed the metrics reports produced by Grunt into a historical dashboard (SonarQube).

- Build applications with I18N (internationalization) support.

Conclusion:

Using Grunt as a continuous development tool gives user-interface development the right process and tools, and improves both productivity and quality. In less than two weeks, I set up the process, learned two new languages (CoffeeScript and Stylus), and delivered a great project. Don’t hesitate to start using Grunt for continuous integration of the UI.

S3 Object Tagging is a new feature introduced by AWS in December 2016. It is a really nice feature that allows you to track the costs on your invoice by tag.
